AI Labor Part 1: Leverage

The magnification of gains and losses stemming from leverage is typically symmetrical: a given amount of leverage amplifies gains and losses similarly. - Howard Marks

I spent the last year replacing manual paperwork tasks with AI.

I've also heard from people who are both terrified and excited about what an AI future means for them as employees, or as parents of future employees.

This is an exploration of the near-term labor market impact of the current generation of AI systems.

In short: AI will give more leverage to more kinds of workers and this will:

- Make the most trustworthy and emotionally attuned workers better off

- Cause low performing or untrustworthy workers to lose their jobs

- Make a lot of investors better off by boosting margins and asset prices

Buckle up.

Defining Leverage

In 2012 Eric Barone couldn't find a job as a programmer and was working as a part-time theater usher while developing an indie video game that would become known as Stardew Valley.

His game, which took him 4 years to develop as a solo creator, went on to become one of the best-selling indie games of all time, selling over 30m copies.

I bring this up because it's a beautiful juxtaposition: two roles, carried out by the same person, that sit at opposite ends of the leverage spectrum.

Both game developer and theater usher are responsible for helping entertain people. Yet their leverage couldn't be more different.

As a theater usher you have to be physically present for every showing in order to create value. You can only help a few people find their seats at a time. The hours you put in map roughly 1:1 to the value you create. Your work is not scalable, repeatable or automated.

As a game developer you can build something once and distribute it without additional cost: this is what economists call zero marginal cost. Once Barone had built the game, he could entertain millions of people without putting in any extra effort for each additional player.

As a rough estimate, assume he put 8000 hours into creating the game. That works out to roughly one second of development time for each of the 30m people who bought the game. This one second per person looks even better when you consider that reviewers put the time needed to complete the game at about 150-200 hours.
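The arithmetic behind that estimate can be sketched in a few lines of Python. This is a minimal back-of-the-envelope sketch: the inputs are the rough assumptions above (8000 hours, 30m copies, 150 hours of play), and the function names are illustrative, not from any library.

```python
# Back-of-the-envelope leverage math for Stardew Valley.
# All inputs are the article's rough assumptions, not measured data.

def dev_seconds_per_player(dev_hours: float, players: int) -> float:
    """Development time attributable to each player, in seconds."""
    return dev_hours * 3600 / players


def play_to_dev_ratio(dev_hours: float, players: int, play_hours: float) -> float:
    """Hours of play per hour of development, if every player plays `play_hours`."""
    return play_hours * players / dev_hours


DEV_HOURS = 8_000        # assumed total development effort
PLAYERS = 30_000_000     # copies sold
PLAY_HOURS = 150         # low end of the 150-200 hour completion estimate

print(f"{dev_seconds_per_player(DEV_HOURS, PLAYERS):.2f} s of development per player")
print(f"{play_to_dev_ratio(DEV_HOURS, PLAYERS, PLAY_HOURS):,.0f} h of play per h of development")
```

With these inputs the first figure is about 0.96 seconds per player. The second treats every buyer as playing 150 hours, so it should be read as an upper bound: many buyers never finish the game.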

Because the product Barone created was scalable, one second of his effort in effect produced hours of enjoyment for each customer. This is leverage.

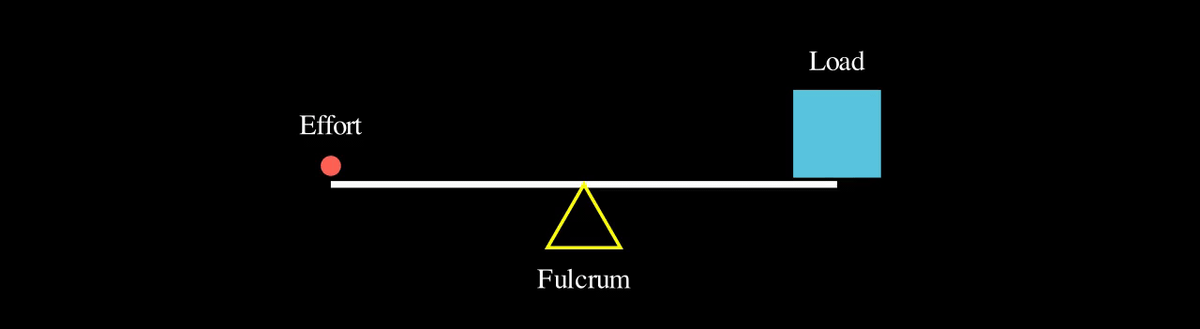

In the original mechanical sense, leverage is the ability to apply a small force at the right place to move a much larger object. To extend the physical analogy: the more scalable your work is, the longer and more powerful your lever.

Longer levers provide more leverage - less effort moves more load

This notion has since been generalised to mean getting greater returns on effort in domains like labor (discussed here) or finance (by using borrowed money).

The most well compensated and well known people in the world all operate with massive leverage.

Copilots vs Autopilots

Technical teams all over the world are racing to implement AI systems to produce better experiences, efficiency, and automation. They want to create leverage – and be compensated for it!

To oversimplify, there are two approaches to deploying AI models in business today:

- Copilot - the AI works alongside a human employee to solve an operational or customer problem

- Autopilot - the AI interacts directly with customers or other departments, and no employee vets or is involved in the system's decisions

A tool that drafts a contract for a lawyer to tweak and send the client is a copilot.

A system that automatically responds to customer service requests is an autopilot.

Given the current maturity of AI models, our integrations with them, and the social norms and regulatory considerations around inserting AI models into important workflows, I expect we'll see significantly more "copilots" than "autopilots" in the near future.

Businesses are impressed by the results LLMs can produce, but wary of edge cases where they get things wrong, and of vulnerabilities or unforeseen issues once they are put directly in front of the public or customers.

This will produce positive returns for workers who can extend their capabilities with AI tools while still managing relationships and/or providing sound judgement: they'll be able to scale themselves.

For most workers this won't mean Stardew Valley-scale leverage, but it will mean more scale than traditional roles offer.

One analogy to think about is that "everyone is becoming a software engineer".

I mean this in two ways:

- Literally: It's now much easier for anyone to develop code by using AI tools

- Metaphorically: Non-tech workers will be given superpowers by copilots, giving them leverage in their daily work that is economically similar to the power software engineers have had over the last 20 years

It's worth noting, though, that because LLMs are very good at helping write and debug code, good software engineers will also have their leverage multiplied, making them even more valuable than before.

Perhaps another way to think about it is that "everyone is becoming a manager": we all get to manage a set of AIs that can do a lot of the work but need some human supervision.

The copilot model will allow the best workers in every white collar sector to produce a great deal more output per hour worked.

Based on research I've been doing, I suspect a 2-3x productivity increase for many white collar workers is a reasonable goal over the next 5 years.

Leverage Metrics

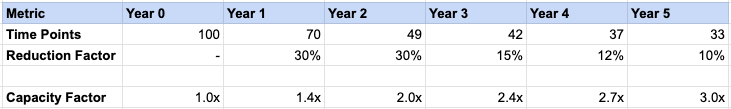

Suppose a worker today spends 100 "time points" (a simplified stand-in for hours) to complete their workload, and you can reduce that by 30% in year one, by another 30% in year two, and so on. Even if you assume the efficiency gains drop off sharply in later years, a 2-3x improvement seems within reach.

By year five a worker putting in the same number of hours could produce 3x as much output (think: compliance filings made, contracts drafted, etc.).
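The compounding in this simple model can be sketched in Python. This is a minimal sketch under assumptions: the 30% cuts in years one and two come from the text, but the later-year cuts (20%, 10%, 5%) are a hypothetical drop-off chosen for illustration and are not taken from the chart. `project_time_points` is an illustrative name.

```python
def project_time_points(start: float, yearly_cuts: list[float]) -> list[float]:
    """Time points needed for the same workload at the end of each year."""
    points = []
    current = start
    for cut in yearly_cuts:
        current *= 1 - cut  # each year's cut compounds on the previous year's result
        points.append(current)
    return points


# Hypothetical schedule: 30% cuts in years 1-2, then sharply diminishing gains.
CUTS = [0.30, 0.30, 0.20, 0.10, 0.05]

for year, points in enumerate(project_time_points(100, CUTS), start=1):
    # Output per hour scales inversely with the time each unit of work takes.
    print(f"Year {year}: {points:5.1f} time points -> {100 / points:.2f}x output")
```

Under this schedule the workload falls to about 33.5 time points by year five, just under a 3x output gain. Compounding is what matters: the later, smaller cuts still apply to an already-reduced base.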

These estimates come from talking to workers across domains like law, accounting, and financial services and seeing how much of their work involves workflows that could be enhanced.

A huge amount of the effort in many white collar jobs today looks something like this sample flow for a trust and estate lawyer:

- Understanding the needs of the client (low potential to automate)

- Collecting data from the client (high)

- Transforming and normalising data (high)

- Drafting (high)

- Reviewing (mid)

- Communicating back with client (low)

In the high-potential steps, as much as 80-90% of the work could be offloaded to an AI, while perhaps only 10-20% could be offloaded in the low-potential ones.

If you assume that for most workers 20% of their time is relationship work that can only be reduced by 10%, and 80% is manual workflow that can be reduced by 80%, you are left with 20 × 0.9 + 80 × 0.2 = 34 time points, roughly a 3x gain. That looks a lot like the "year 5" result in the simple model above.
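The same weighted calculation generalises to any mix of work categories. This is a minimal sketch: the 20/80 split and the 10%/80% reductions are the illustrative assumptions from the paragraph above, and `remaining_time_points` is a hypothetical helper name.

```python
def remaining_time_points(mix: dict[str, tuple[float, float]]) -> float:
    """Sum each category's time points after removing its automatable fraction."""
    return sum(points * (1 - reduction) for points, reduction in mix.values())


# (time points today, fraction that can be offloaded to AI) -- illustrative only
workload = {
    "relationship work": (20, 0.10),
    "manual workflows": (80, 0.80),
}

remaining = remaining_time_points(workload)
print(f"{remaining:.0f} time points remain -> {100 / remaining:.1f}x output")
```

Adjusting the shares or reductions for a specific role, for example a heavier relationship component, shows how quickly the achievable multiple falls as the low-automation share grows.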

Whether in this domain or another (e.g. compliance checks or underwriting), there's a "fat middle" of work sitting between the customer/stakeholder touch points at either end of the process, and it is ripe for copilot-style automation.

Engineer Economics

In 2013 I was in a 1:1 with my manager, an engineering executive at Twitter, talking about his obsession with airplanes. I was new to Silicon Valley and a question popped into my head: "You're so obsessed with aviation... why don't you go work in aerospace?" – he looked at me as though I was a raving lunatic and pointed out that Silicon Valley was the best ROI experience for any worker in the history of humanity. He was right.

That great employment experience exists because software engineers have more leverage than any other workers in history. This led to both better wages and better working conditions than almost any other workers have enjoyed.

Work output from an engineer within a scalable technology company can be amortized over a massive set of customers. The software that is written can then be re-used millions, billions, or trillions of times with minimal marginal cost - usually just the price of electricity to run servers.

Technology allows small teams to accomplish big things (leverage!):

OpenAI had maybe 300-something employees when they launched ChatGPT.

Instagram had built a product used by 30m people with 12 employees when it was acquired by Meta (then Facebook).

The original iPhone was also built by a team of about a dozen people.

When I worked with teams on early versions of Stripe Atlas and Stripe Billing the teams were tiny at the start, maybe 5-6 people. The very first version of Stripe Invoicing was built by a single engineer working alone.

Engineers create value that is disconnected from the hours they spend, and they're often compensated that way too (by taking equity in the companies they work for). This is the path to wealth creation for many in the modern era.

If you want to raise your income in the long run, find a way to divorce your time from your earnings.

— Nick Maggiulli (@dollarsanddata) July 17, 2024

While earning more per hour is great, earning more no matter the hour is better.

Tech companies also compensate their other employees along this model, so a rising tide in Silicon Valley has lifted many boats across the functional spectrum.

AI will broaden the scope for this sort of return. More companies will begin to act like tech companies, both in terms of actual technology creation and usage and in terms of scalability and profitability. It seems to me that every great company will need to be, at least partially, a technology company in the future. Almost exactly 13 years on, Andreessen's "Software Is Eating the World" essay looks more prescient than ever.

Consequences

This means more roles will begin to have characteristics similar to software engineers:

- Top performers can have outsized impact (and compensation)

- Poor judgement from one person can cascade throughout a business

- Compensation may begin to diverge from strict hourly correlation

The upside of having a good employee will increase (so you’ll want to retain them) and the blast radius of a bad employee will increase (so you’ll need to get rid of them).

If you want a good example of the risks of greater employee leverage, look no further than the recent CrowdStrike-caused IT meltdown, which affected 8.5m Windows devices around the world and is likely the worst IT incident in history. It was likely caused by a single faulty update that shipped without being detected.

As we all gain more leverage, decision-making will become the key human factor, whether we are business people or workers.

Naval makes this point well in the clip below. As the future unfolds we will put an ever greater premium on being healthy - mentally and physically - as this is what it will take to deliver peak performance.

In the age of infinite leverage, judgment is the most important skill. pic.twitter.com/2z3BxagMg4

— Naval (@naval) July 16, 2024

If you're keen to benefit as an employee in the coming years I would:

- Enthusiastically embrace AI tooling

- Think about how to enhance the relationship elements of your work

- Meditate on what "good judgement" means in your domain and how to advertise that you have it

Conclusion

In general I think the future looks bright for a lot of people, maybe most people, as AI gives us all superpowers. We'll all be richer as society becomes ever better off. We'll all have tools that can help us plug the gaps in our skillsets.

Longer term we may see more autopilots, and we may see more of the disruption move from white collar, data-oriented work to physical-world work. These changes will roll through the economy quickly on a generational scale, but slowly on a year-to-year scale.

There will be time to adjust - and we'll do what we've always done as we become more technologically enabled: Invent wondrous new kinds of economic activity and the jobs that accompany them.

Going forward we should all invest more in our judgement and relationships - it is these human elements that will be durably important to us even as the machines rise.

Up next: AI Labor Part 2: Rollout - how models will get deployed across various jobs. Subscribe for free to get this in your inbox when it's published.

Feedback, ideas, reading suggestions? I'd love to hear from you: n@noahpepper.com